This is probably going to seem extremely basic to some people, but I think it’s a very useful technique that many SQL developers might not know about.

You can use Excel as a quick and precise ETL tool. Not for 10GB data files. Not for data that’s not already in Excel. But when you have data in Excel – especially if it’s messy – and you want to get in into SQL Server quickly, there’s a good way of doing that.

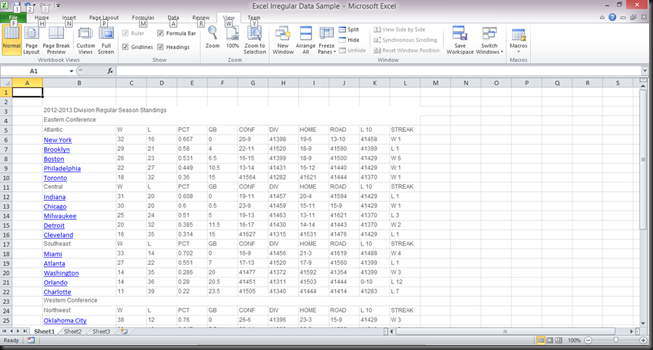

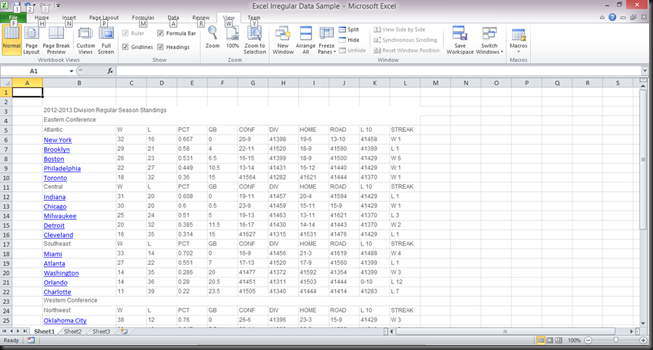

Let’s start with some messy Excel data. Here are the current NBA standings that I copied from the NBA’s website.

Now, the data isn’t hideous. It’s organized in a consistent manner. It’s just not normalized. The technique I’m going to describe works on data in all kinds of formats.

Create the Table

The first thing I’m going to do is define the table in SQL. I’m going to write the create table statement in Excel, so that my entire script will be in one place. So in cell M2, I’m going to write:

create table dbo.NbaStandings (Id integer identity,TimePeriod varchar(100), Conference varchar(50), Division varchar(50), Team varchar(50), Wins integer, Losses integer, WinPercent decimal(4, 3), GamesBack decimal(10, 2), ConfRecord varchar(10), DivRecord varchar(10), HomeRecord varchar(10), RoadRecord varchar(10), Last10 varchar(5), Streak varchar(5)); The column definitions are derived from the data, of course. That’s the easy part.

Insert the Data, Part 1

Next I’m going to create the insert statements in Excel, referencing the data in the spreadsheet. In cell M6, I’m going to write:

="insert into dbo.NbaStandings (TimePeriod, Conference, Division, Team, Wins, Losses, WinPercent, GamesBack, ConfRecord, DivRecord, HomeRecord, RoadRecord, Last10, Streak) values (" But I'm not ready to write more just yet. SQL Server string values have to be enclosed in single quotes. Also, when you concatenate cell references in an Excel formula, you have to use double quotes. I could write the rest of the insert statement that way, but that’s going to be one cumbersome, unreadable formula. Some Excel macros will make it much easier to write.

Write the Macros (or just use mine)

Here are the macros I’m going to create in the Excel VBA editor. I recommend keeping these macros in your personal macro workbook, so you don’t have to add them to every workbook you want to use them in.

This macro will escape single quotes in in a string, and enclose a string in single quotes:

Public Function SqlEncode(TheString) As String

Public Function SqlEncode(TheString) As String

Dim ReturnValue As String

ReturnValue = TheString

ReturnValue = Replace(ReturnValue, Chr(39), Chr(39) & Chr(39)) 'escape apostrophe

ReturnValue = Chr(39) & ReturnValue & Chr(39) 'enclose in apostrophes

SqlEncode = ReturnValue

End Function

This macro will take a single cell, and apply the macro above to its value, optionally adding a comma:

Public Function SqlValue(TheRange As Range, Optional IncludeComma As Boolean = True) As String

Dim ReturnValue As String

ReturnValue = ReturnValue & SqlEncode(TheRange.Text)

If IncludeComma Then ReturnValue = ReturnValue & ", "

SqlValue = ReturnValue

End Function

And this macro will take a range of cells, apply the SqlEncode macro to all of them, and concatenate them with commas:

Public Function SqlValues(TheRange As Range) As String

Dim TheCell As Range

Dim ReturnValue As String

Dim counter As Integer

counter = 0

For Each TheCell In TheRange.Cells

If counter > 0 Then ReturnValue = ReturnValue & ", "

ReturnValue = ReturnValue & SqlEncode(TheCell.Text)

counter = counter + 1

Next

SqlValues = ReturnValue

End Function

With those macros created, I’m ready for the next step.

Insert the Data, Part 2

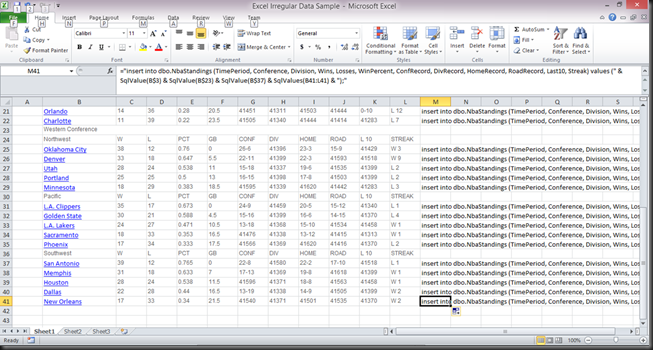

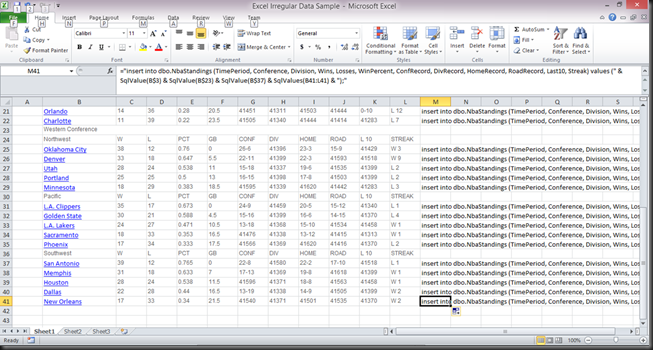

The first real record on the spreadsheet is on line 6, but the values in the three rows above will also be part of the record. This is where the data not being normalized makes things messy. Here’s my continued formula:

="insert into dbo.NbaStandings (TimePeriod, Conference, Division, Team, Wins, Losses, WinPercent, GamesBack, ConfRecord, DivRecord, HomeRecord, RoadRecord, Last10, Streak) values (" & SqlValue(B$3) & SqlValue(B$4) & SqlValue(B$5) Notice that I made the row reference for those three values absolute, because I don’t want them to change when I copy the formula to new rows.

The next part of the formula uses the data on the same row my formula is on, so I can use the SqlValues macro to take care of them all:

="insert into dbo.NbaStandings (TimePeriod, Conference, Division, Team, Wins, Losses, WinPercent, GamesBack, ConfRecord, DivRecord, HomeRecord, RoadRecord, Last10, Streak) values (" & SqlValue(B$3) & SqlValue(B$4) & SqlValue(B$5) & SqlValues(B6:L6) & ");" My formula is now complete. Here's the resulting TSQL:

insert into dbo.NbaStandings (TimePeriod, Conference, Division, Team, Wins, Losses, WinPercent, GamesBack, ConfRecord, DivRecord, HomeRecord, RoadRecord, Last10, Streak) values ('2012-2013 Division Regular Season Standings', 'Eastern Conference', 'Atlantic', 'New York', '32', '16', '0.667', '0', '20-9', '41398', '19-6', '13-10', '41458', 'W 1'); Now I can go ahead and copy the formula into all of the other data rows on the spreadsheet:

I have to change the formula’s references to the conference and division cells several times when copying the formula, because this data set isn’t normalized, as previously pointed out. I could have used an OFFSET formula in Excel to get around having to do that, but I wanted to keep this example relatively simple.

Execute the Script

The entire script to create and populate the table in now in column M of my spreadsheet. I’m going to go ahead and copy that column into new query window in SQL Server:

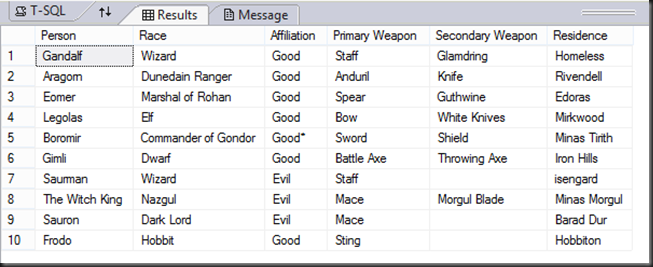

Once I execute the script, the data is now in a SQL Server table:

Conclusion

Given how de-normalized that data was, this wouldn’t have been possible with a standard ETL engine such as SSIS, without first normalizing the data in Excel.

At my job, I get a lot of data in a format like this or worse, so I’ve found this technique very helpful over the years. I hope others will fine it helpful as well.